
News

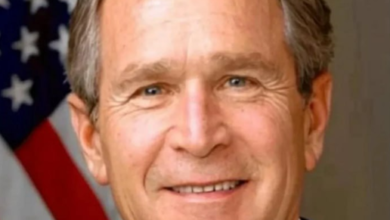

Breaking – Sad News About George W. Bush!

Former President George W. Bush has been in the public eye for decades, but every so often, an unexpected moment…


Houses

Mountain Serenity Investment Opportunity

Nestled in the scenic hills of Wallins Creek, this rare multi-unit property presents an extraordinary opportunity to own 33 pristine,…


News

What the “Cement Face” Lady Looks Like 21 Years Later

Rajee Narinesingh became widely known as “Cement Face” after undergoing black market plastic surgery in the mid-2000s, performed by the…


